Leverage feels like losing control: that’s the point, and the path

FAVOR: If you enjoy our content, please subscribe here on Substack, as well as to our podcast, PossibLaw, on the various platforms. We greatly appreciate it!

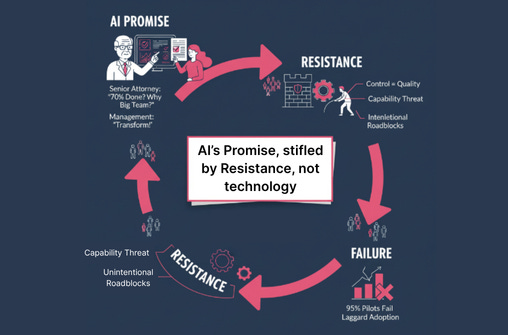

A story keeps repeating across legal. A senior attorney sees the output of AI and asks: “If this AI can do 70 percent of my team’s work, why do I need a huge team (legal department)? Or what am I now selling (law firm)?” So management pushes ahead with transformation. And yet we see reported failure rates of 95% in AI pilots and lagging adoption in production. It’s not surprising once you dig past what the technology can do. In legal, control masquerades as quality, and anything that spreads capability from the few to the many looks like a threat. So you get both intentional and unintentional slowdown.

What does this look like in practice?

A legal team pilots an AI tool for intake triage and drafting. Early wins pile up. Then the brakes hit. Approval chains grow. “Human-in-the-loop” turns into five humans. A risk memo multiplies into a policy labyrinth. The initiative doesn’t die; it starves.

Why? Not because the technology is weak. Because leverage redistributes control. Standard prompts, shared clause libraries, and auto-QA tilt power away from bespoke judgment and toward repeatable systems. If your status is tied to being the one who knows which clause “sings,” a model that suggests that clause feels like a demotion.

The stakes are real. Slow-walking AI means slower matters, thinner margins, and juniors stuck in busywork that teaches the wrong lessons. Meanwhile, cycle times don’t come down, your C-suite and clients are irritated, and nothing improves. Whether your client is the business your legal department serves, or you’re a law firm with business clients across industries, the end user doesn’t care that you preserved a lawyer’s sense of authorship; they care that you met the deadline, reduced risk, and moved the ball forward.

The Fix: name the real constraint.

The blocker isn’t accuracy. It’s identity. Control signals expertise, ownership, and billable gravity. When leverage shows up, it looks like loss: fewer keystrokes, fewer approvals, fewer private piles of know-how. The fix isn’t to argue people out of ego. It’s to route ego, incentives, and risk toward the system outcome you want.

Framework → Leverage: compounding beats heroics.

Standardize the end state: define “done” for each artifact with acceptance criteria, redline thresholds, and QA checks that models must pass.
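To make “done” concrete, here is a minimal Python sketch of acceptance criteria expressed as checks a draft must pass. Everything in it is an illustrative assumption rather than a prescribed standard: the `Check` type, the `NDA_CHECKS` list, the keyword tests, and the 0.5 redline threshold are all hypothetical, and a real team would substitute its own criteria.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Callable


@dataclass
class Check:
    """One named acceptance criterion for a drafted artifact (hypothetical type)."""
    name: str
    passes: Callable[[str], bool]


def redline_ratio(template: str, draft: str) -> float:
    """Rough share of the draft that departs from the template (0.0 means identical)."""
    return 1.0 - SequenceMatcher(None, template, draft).ratio()


def evaluate(draft: str, checks: list[Check]) -> dict[str, bool]:
    """Run every check and report pass or fail by name."""
    return {check.name: check.passes(draft) for check in checks}


def is_done(draft: str, checks: list[Check]) -> bool:
    """A draft is 'done' only when every acceptance check passes."""
    return all(evaluate(draft, checks).values())


# Illustrative template and criteria; the wording and the threshold are assumptions.
TEMPLATE = "This Agreement is confidential. Governing law: New York."

NDA_CHECKS = [
    Check("has_governing_law", lambda text: "governing law" in text.lower()),
    Check("has_confidentiality", lambda text: "confidential" in text.lower()),
    Check("within_redline_threshold", lambda text: redline_ratio(TEMPLATE, text) <= 0.5),
]
```

Calling `evaluate(draft, NDA_CHECKS)` returns a pass or fail result per criterion, so a reviewer sees which check failed instead of debating whether the draft “feels” finished.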

Centralize reusable assets: vetted prompts, clause packs, exemplars, and evaluation datasets in one governed library.

Instrument everything: log prompts, diffs, and outcomes so wins become data, not anecdotes.
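As a rough sketch of what that instrumentation could look like, the Python below records each prompt, the diff between the model’s draft and the final text, and the outcome as one JSON line per matter. The function names, field names, and outcome labels are hypothetical choices for illustration, not a required schema.

```python
import difflib
import json
from datetime import datetime, timezone


def build_record(prompt: str, model_draft: str, final_text: str, outcome: str) -> dict:
    """Capture the prompt, the edits a lawyer made to the model draft, and the outcome."""
    diff = list(
        difflib.unified_diff(
            model_draft.splitlines(),
            final_text.splitlines(),
            fromfile="model_draft",
            tofile="final",
            lineterm="",
        )
    )
    # Skip the two file-header lines, then count added and removed lines.
    changed = sum(1 for line in diff[2:] if line.startswith(("+", "-")))
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "diff": diff,
        "changed_lines": changed,
        "outcome": outcome,  # e.g. "accepted", "accepted_with_edits", "rejected"
    }


def append_record(path: str, record: dict) -> None:
    """Append one record as a JSON line so the log stays easy to query later."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
```

A field like `changed_lines` is a crude proxy, but tracked over time it shows whether drafts need fewer human edits, which is the kind of evidence that turns a win into data.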

Takeaway:

Control feels safe; leverage compounds. Decide, design, deliver.