The myth of “agnostic surveys” in legal tech

FAVOR: If you enjoy our content, please subscribe here on Substack and to our podcast, PossibLaw, on your preferred platform. We greatly appreciate it!

A GC showed me a glossy vendor “comparison” and said, “We’ll just pick the top quadrant.” Their team had a backlog of NDAs, a messy intake process, and no single source of truth. The survey never asked about any of that. It felt safe. It was also useless.

What looks neutral from afar is biased up close. In legal, we keep outsourcing judgment to vendor lists, panels of other lawyers, or a subreddit thread. It feels objective, and it spares us from making the call ourselves. In the AI flood, that habit is turning into real risk.

What “agnostic” often hides

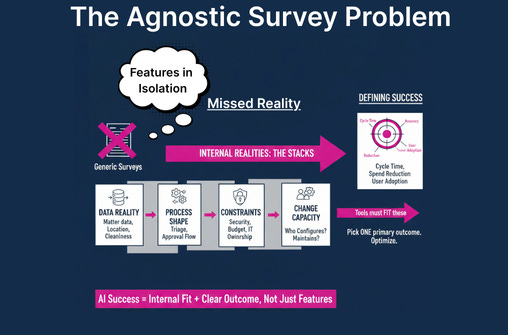

Most surveys measure features in isolation. In reality, you buy a stack, and the value comes from how well the pieces fit your department. A good decision starts with your end state, not a vendor matrix. Fit depends on five things:

Data reality. What matter data do you have today? Where does it live? How clean is it? AI tools depend on this. If your corpus is scattered, a top model will underperform.

Process shape. Where do requests enter? Who triages? What gets auto-approved? Tools should reinforce, not fight, this flow.

Constraints. Security, budget, procurement rules, and IT ownership are not footnotes. They are the rails.

Change capacity. Who will configure, train, and maintain? If the answer is “the same two overworked ops folks,” buy accordingly.

Success metric. Cycle time, accuracy, outside counsel spend, user adoption. Pick one primary outcome and optimize for that.

None of these show up in a generic “agnostic survey.” They live inside your department.

The friction you’re not naming

Copying another department’s stack is attractive because it avoids conflict and speeds up the meeting. But it ignores four realities:

Your data is different. Volume, structure, languages, privilege patterns, clause libraries, redline history. Tools perform on what you feed them.

Your outcomes differ. Are you optimizing for cycle time, risk posture, outside counsel spend, or auditability? “Top rated” is not a goal.

Your processes vary. Hand-offs, approvals, and matter triage shape what actually gets adopted.

Incentives distort the map. Vendors sell what they have. Many “agnostic” reviews are partner ecosystems in disguise. Even well-meaning peers are optimizing for their world, not yours.

If the rest of the business hires domain-savvy consultants to translate strategy into architecture, why do legal leaders expect a survey to do systems design?

The consultant question

Let’s be real. Some consultants are just channel resellers in nicer jackets. They optimize for their partner network and succeed when you buy licenses. But there are practitioner-grade advisors who sit on your side of the table. They measure success in adoption, not SKUs. The rest of the business uses them because they compress the path from strategy to execution. Legal should too.

What good advisors do:

Work backward from outcomes. They force you to commit to a single sentence: “In 90 days we will reduce intake-to-first-touch from 9 days to 2.”

Map process to data. They draw the current path, highlight friction, and identify where tech creates leverage.

De-risk the build. They stage pilots, instrument metrics, and define an exit if value does not show up.

Negotiate with context. They know which features are real, which are roadmap, and where to push on price and support.

Protect identity and incentives. They keep you from buying a brand to impress peers instead of a tool that solves your work.

A quick example

Contract AI that looks brilliant on paper

Team A selected a “top” review tool because a survey said its models were the strongest. In the pilot, it failed quietly. Why? Their clauses were non-standard, negotiated in two languages, and scattered across SharePoint sprawl. The model was fine. Throughput died on permissions, data mapping, and missing fallback clauses. The fix was not “better AI.” The fix was a clause library, cleaned-up repositories, and an approval matrix the tool could actually read.

The reframe

You are not buying features. You are buying future operating leverage. Surveys tell you what is shiny. Your data, outcomes, and process tell you what will compound.

Stop asking “What’s the best tool?” Start asking “What does our system need to achieve, and what is the cheapest reliable way to get there?”

Take Action

Surveys are inputs, not decisions. Peers are reference points, not roadmaps. Vendors are partners, not architects. If you want leverage, anchor the choice in your data, your process, and your outcomes. That is how you reduce friction, avoid shelfware, and amplify impact. Stop tweaking. Start transforming.