Train the Thinker, Not the Box

Thanks for reading & listening to PossibLaw. If you like what you see and hear, I encourage you to subscribe. We are a small team working to help the legal industry grow and transform.

The GC paused, looked at the vendor’s glossy slide, and said the quiet part out loud. “So you’re telling my lawyers not to learn this because your model will think for them.” The room nodded. Cheaper. Faster. No homework.

That is the seduction. It is also the trap.

In law, the model is not the point. Judgment is.

The real black box is not the model. It is our training gap.

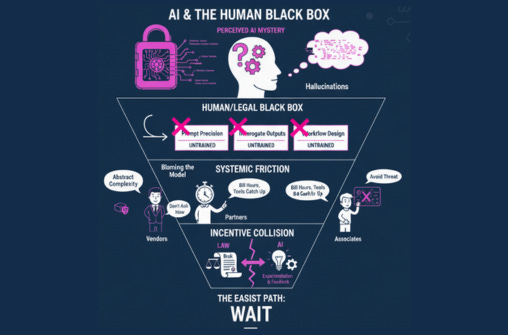

We talk about AI like it is a mysterious vault. Parameter counts. Hidden layers. Hallucinations. Fine. But there is a second black box hiding in plain sight. It is the human one. We have not trained lawyers to prompt with precision, to interrogate outputs, or to design workflows that make the right use of a probabilistic system. So we blame the model when the process was never taught.

Vendors encourage it. “We abstract away the complexity.” Translation: please do not ask how this works, and please do not measure it against how you think. Partners reinforce it. “Bill your hours, the tools will catch up.” Translation: your value is time spent, not decisions made. Associates internalize it. “If the model is a threat, I should avoid it.” Translation: if I do not look, it cannot replace me.

The friction is structural. Law rewards risk transfer and precedent. AI demands experimentation and feedback. Those incentives collide, and the easiest path is to wait.

What actually matters in legal work

Framing the question. Most legal errors start here. AI cannot rescue a bad question.

Decomposing the task. Research, synthesis, options, implications, then drafting. Tools are sharp at some steps, blunt at others.

Adversarial review. You must try to break your own work. The model will not tell you where it is weak unless you ask in the right way.

Decision under uncertainty. Clients pay for conclusions with risk ranges, not endless memos.

Training targets these muscles. The model amplifies what exists.

If we train nothing, it amplifies nothing.

Two quick scenes

1) The in-house team and the “magical” brief builder

A Fortune 500 law department licensed an AI drafting tool. Adoption flatlined. Lawyers pasted prompts into a single box, accepted the first output, then quit when it sounded generic. The issue was not the model. The issue was that no one had taught them a 10-minute workflow: outline first, then targeted prompts for each section, then source-anchored quotes, then a contradiction pass. Once the lawyers were trained, average drafting time dropped 35 percent. Quality improved because the humans learned to drive.

2) The litigation associate and the cite check

An associate used AI to speed a cite check. It hallucinated two cases. She caught both because her checklist forced a “verify in database, not in the model” step. Partners now think she is a wizard. She is not. She is trained. The tool made her faster. The process made her safer.

Why waiting is the riskiest move

Leverage: Early movers compound advantage. Saved hours, reusable prompts, internal precedent libraries. The learning curve pays interest.

Incentive: Vendors profit from abstraction. Firms profit from hours. Clients profit from outcomes. Only one party signs your review. Align with the client.

Friction: Approval queues, procurement policies, data access. None of these teach a lawyer to think with a model.

Ego: “If this is easy, what am I for?” You are for judgment. Tools do not steal that. They surface options so you can decide.

Stop tweaking the tool list. Start transforming the training.

A simple training blueprint that respects legal reality

Week 1: Questions and constraints

Write three client-style prompts for the same issue, each with a different constraint: time, jurisdiction, audience.

Compare outputs. Collect what changed and what stayed flat.

Debrief: what the model is good at, what it misses, what you must supply.

Week 2: Task decomposition

Break one complex matter into five steps. Assign a model role to each step.

Use checklists that force verification outside the model for facts and citations.

Capture reusable prompts in a shared folder with examples and edge cases.

Week 3: Adversarial review

Stress test your own draft. Ask the model to argue the other side, to find conflicts, to enumerate missing authorities.

Build a “red team” script you run before any deliverable goes to a client.

Week 4: Decision and client communication

Use the model to generate options with pros and cons, then write the recommendation yourself.

Practice a one-page client summary: question, options, recommendation, risk range, next step.

Repeat the cycle on a new matter each month. Measure hours saved and errors prevented. Publish wins internally. Leverage compounds.

Guidelines for safe and effective use

Sources or it did not happen. Always ask for citations and verify in trusted databases.

Local facts beat generic answers. Feed the model your context, and guard confidentiality by using only approved tools.

One box is not a workflow. Outline, then sections, then critique, then integrate.

Your name is on the work. The model drafts. You decide.

The close

Legal work is thinking under constraint. Black boxes do not scare me. Untrained thinkers do. The choice is not “trust the model” or “ban the model.” The choice is “train the lawyer to aim the model.” Work backward from the end state: a clear opinion, a tighter memo, a faster path to a decision. Decide, design, deliver.

Thanks for reading & listening to PossibLaw. We hope you learned something actionable that will help you grow your mind and career. If you enjoyed today’s content, please share it. Thank you!